FROM PROMISE TO PROOF

Rolling out an AI strategy in educational settings needs careful thought, a degree of caution and constant feedback from teachers. Richard Human reports.

The memory of pristine iPads gathering dust in school cupboards haunts any educational technology initiative. These expensive promises of transformation became cautionary tales about implementing technology without thoughtful planning or realistic expectations. That experience has shaped both our deliberately restrained approach to AI implementation across the Globeducate family of schools around the world and our choice to prioritise meaningful teacher training and manageable curriculum development whilst everything else waits.

Six months after our AI strategy launch, feedback from our 70+ schools around the world suggests this human-centred, systematic approach was well advised.

AI Champions Network

Rather than pursuing a single AI solution, we implemented seven complementary initiatives. The initial focus was on supporting AI Champions in each school to provide distributed leadership, and then linking them to each other to establish a peer support network.

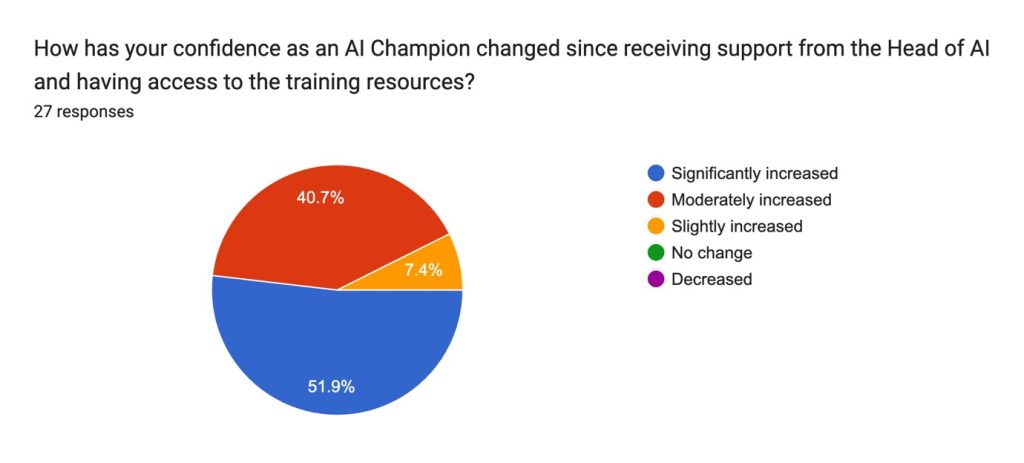

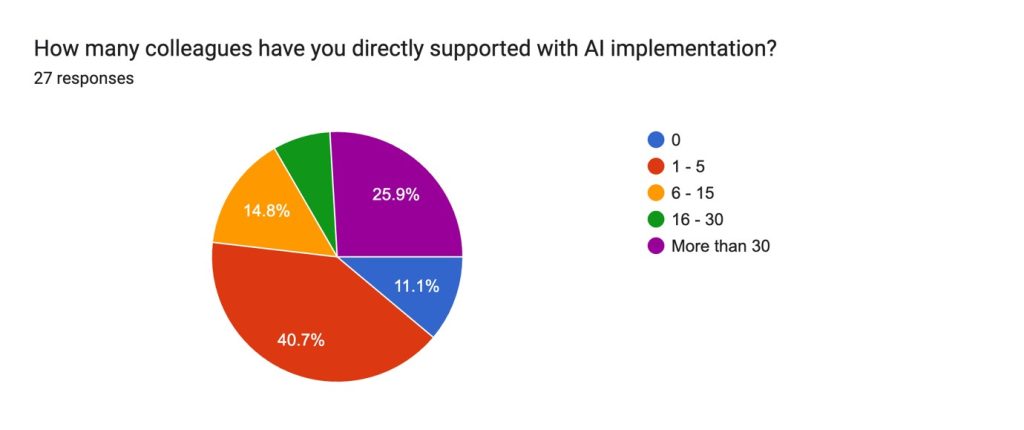

Survey data a few months in suggest the Champions had grown in confidence to such an extent that almost all felt able to start actively supporting their colleagues.

Multi-pronged follow-up

With the Champions network in place, we felt we could move forward in a number of other ways. Firstly, we established an online AI Literacy course for all staff. Now live on Attensi, it provides structured learning pathways that complement Champion support for staff across all our schools.

As staff have learned more, we have started a measured programme of Curriculum Development, writing six manageable 40-minute lesson plans for 12–14-year-olds, which nearly half of our schools are now implementing across year groups or piloting with selected classes.

Meanwhile, a Teachmate AI Pilot has been set up, with 12 schools actively trialling practical classroom applications and testing real-world implementation scenarios, while over 40 schools are participating in a Hi-AI Research Project, an evidence-based development with Elpis Anastasiou, creating a substantial foundation for future decisions.

Four applicants submitted project proposals as part of our Innovation Hub initiative, working on specific development projects to address unique classroom challenges, from report writing to multilingual learning support with AI.

Finally, we are developing a set of comprehensive resources and have kept up the communication across the group, writing weekly blogs, creating template libraries, multi-language materials, and AI prompt collections to support implementation across diverse contexts.

Measured success

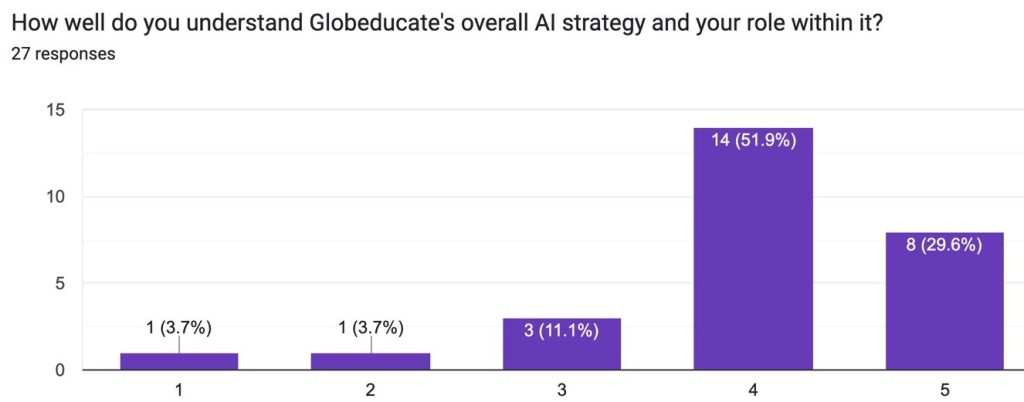

Champions are now growing into their role: they like the materials and, when surveyed, report a growing understanding of the group’s AI strategy and their role within it.

Qualitative feedback also suggests our approach is beginning to have an impact in important ways. One secondary teacher reduced report writing time from 2.5 days to 0.5 days after Champion training. Others are clearly motivated by the Champions’ lead in department-wide sessions, with peer-to-peer contact proving to be particularly energising.

External validation supports our approach. Our systematic, evidence-based approach directly addresses gaps identified in Ofsted’s comprehensive study, which concluded that “the biggest risk is doing nothing” regarding AI in education.

Honest assessment of challenges

Survey feedback, however, also reveals that implementation is challenging. One Champion noted the role is “very demanding time-wise and takes a significant toll on my day-to-day job requirements.” Champions are requesting more time to do their work and paid access to AI tools.

The evidence base remains limited. Our 27 survey responses across 70+ schools represent a small sample. We lack student outcome data and face early-stage challenges as the academic year begins. Innovation Hub projects remain in feasibility stages rather than proven implementations.

Sustainability questions persist around Champion workload balance, scaling support structures across multiple platforms, and managing expectations. As one Champion noted, “Sometimes instructions given from the top [have felt] like pie in the sky mandates,” though some have voiced appreciation for leadership that translates these into “feasible step-by-step approaches.”

Strategic choices

Schools now face pathway decisions based on readiness and capacity. The Foundation Track, for example, focuses entirely on teacher-facing AI applications with direct training support, building expertise systematically whilst avoiding the complexity of simultaneous teacher and student implementations.

The Expansion Track, on the other hand, pursues both teacher-facing and student-facing approaches, with Champions leading student applications locally. This recognises that some schools have developed sufficient capacity for more complex implementation.

Future development requires addressing Champions’ requests for advanced training in tackling school challenges through automation and AI-assisted coding. They want practical tools that are immediately usable for class mixing, report writing, and performance tracking.

Evidence-based validation

We have been careful to align our strategy with external frameworks validating educational technology implementation. EY-Parthenon’s analysis warns of failed technology adoptions due to poor planning, while Professor Rose Luckin’s UCL research emphasises evidence-based implementation, human intelligence primacy, and systematic ethical considerations – principles embedded throughout our strategy.

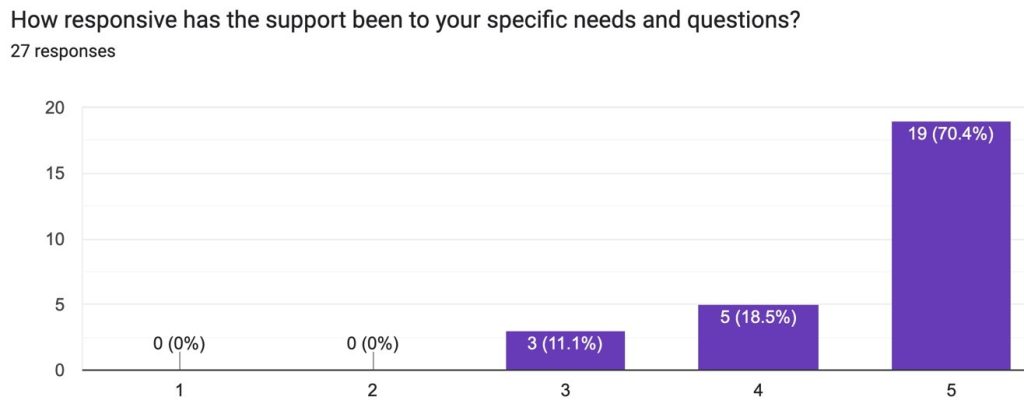

Significantly, 70% of Champions rated support as “very responsive” to specific needs, suggesting we’ve created genuine value rather than additional burden.

Looking forward

Can we sustain what seems to be a promising start? To scale effectively, the strategy will have to come through the test of a full academic year, with all the pressure it brings, across multiple school contexts.

We’ve avoided the trolley syndrome not by avoiding technology, but by approaching the challenge of AI with humility and the kind of systematic thinking that educational transformation requires. The evidence so far shows measured progress toward the use of a new technology that genuinely enhances human potential rather than creating new burdens.

Yet we remain at the beginning. Success will ultimately be measured not by technical achievements but by educational outcomes: students more engaged, teachers more effective, and learning environments more inclusive and supportive. Early indicators suggest we’re building toward those goals whilst establishing foundations for continued innovation in educational practice.

The best educational technology becomes invisible – teachers forget it’s technology at all. We’re not there yet, but we’re building systematically toward it.

Richard Human is the Head of AI at Globeducate

FEATURE IMAGE: by Philip Oroni for Unsplash+

Support Images & Graphics: Thank you to Richard